How to tell whether a text was written by a neural network

Miscellaneous / August 21, 2023

Artificial intelligence is a good assistant in many areas. But you should not trust it unconditionally.

According to Bloomberg, about 30% of professionals use neural networks to generate text. In Russia, 67% of respondents would like to use artificial intelligence at work so they can work less without losing income. Not all clients are pleased by these statistics, though. Some do not trust neural networks and would rather have texts written by people than by robots.

Let's look at why clients are wary of the technology and what tools exist today for exposing AI-generated texts.

Why texts from neural networks should be treated with caution

Here are the main reasons why customers are wary of such articles.

Risk of copyright infringement

There is currently no official position on who owns the copyright to texts created with AI. Under the law, an author is a person whose creative work produced the piece. With a neural network, however, people only give instructions. They do not write the text themselves.

Because the law does not currently recognize AI-generated texts as objects of copyright, the rules for using such content are set by each neural network's user agreement.

OpenAI transfers the rights to the text even in the free version. Gerwin, by contrast, prohibits using its output for political or discriminatory purposes or in unfair advertising. Midjourney allows commercial use of content only if it was generated on a paid plan.

Risk of obtaining non-unique or inaccurate information

A neural network receives a request, runs it through its algorithms, analyzes the information available on the topic, and produces an answer. The same request from another user may well produce an identical or very similar text.

This leads to another danger. Researchers at Cornell University concluded that if a neural network's answers to the same question vary greatly, it is very likely making up facts.
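The idea behind this finding can be illustrated with a simple consistency check: ask the same question several times, then measure how closely the answers agree. The sketch below uses word overlap (Jaccard similarity) as a stand-in for whatever measure the researchers actually used. It is not their method. The function names and the 0.5 threshold are hypothetical.

```python
import re
from itertools import combinations


def word_set(text: str) -> set[str]:
    """Lowercase the text and return the set of words it contains."""
    return set(re.findall(r"\w+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two answers have in common (1.0 = identical word sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def consistency_score(answers: list[str]) -> float:
    """Average pairwise word overlap between answers to the same question."""
    sets = [word_set(a) for a in answers]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 1.0  # a single answer has nothing to disagree with
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def looks_inconsistent(answers: list[str], threshold: float = 0.5) -> bool:
    """Hypothetical rule: low agreement between answers hints at invented facts."""
    return consistency_score(answers) < threshold


if __name__ == "__main__":
    stable = [
        "Water boils at 100 degrees Celsius at sea level.",
        "At sea level, water boils at 100 degrees Celsius.",
    ]
    shaky = [
        "The term was coined in 1962 by a French psychologist.",
        "It first appeared in a 1998 marketing textbook.",
    ]
    print(consistency_score(stable), looks_inconsistent(stable))
    print(consistency_score(shaky), looks_inconsistent(shaky))
```

Word overlap is a crude proxy. Two answers can state the same fact in different words, and two answers can share many words while disagreeing on a date or name. Treat a low score as a prompt to fact-check, not as a verdict.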

Risk that AI-generated texts will push a company's site down in search results

At the end of last year, Duy Nguyen from Google's search quality team stated that the company has algorithms to detect and demote AI-generated content. As a result, many fear that search engines will seek out such content and penalize it, that is, lower its position in search results.

There are already precedents. For example, marketer Neil Patel ran an experiment with 50 test websites, which he split into two groups. He filled the first group's sites with articles written entirely by AI. The second group's sites hosted AI articles edited by people, as well as pieces written by copywriters without neural networks.

The sites in the first group dropped several positions in search results, and their traffic fell by up to 70%.

At the same time, Google representatives said they view the development of neural networks positively and have already built their own chatbot, Bard. Quality content, however, remains the company's priority. Its ranking system favors materials that meet the E‑E‑A‑T standards (experience, expertise, authoritativeness, trustworthiness).

Texts that meet these standards inspire trust and are considered useful because they contain examples, personal experience, analysis, and research. Content produced by neural networks without substantial human editing often lacks these qualities.

Meanwhile, the State Duma has proposed labeling materials created with AI. Until that happens, you will have to work out whether a text was generated by a neural network yourself, or with the help of special services.

How to tell on your own whether a text was written by a neural network

MIREA Technological University recently held an experiment involving 20 teachers and more than 200 students. Half of the students wrote academic papers on their own, and the other half used neural networks. The teachers' task was to identify the AI-assisted papers.

To do this, the teachers examined the stylistic and spelling features of each text. They looked, for example, for frequently repeated words and ideas, factual and logical errors, and a lack of original judgments. This way they identified 96% of the papers written with a neural network. The 4% of students who went undetected admitted that they had spent many hours editing the AI text.

There is no universal method for spotting text generated by a neural network. However, the experiment shows that such materials share certain patterns. Let's look at them in more detail.

Repeated ideas and words

One reason a site may be demoted in search results is keyword over-optimization. In response to a request, a neural network often "goes in circles": sometimes it varies the wording, but the meaning keeps repeating.

In the screenshot below, for example, the AI was asked to write a promotional post about a new, gentle hair-coloring method. Even in a short text, the neural network repeated the same points several times.

The phrase "a new coloring method" appears in almost every sentence of the generated text. Ideas about the method's safety, and about an individual approach that will emphasize each client's uniqueness, are also repeated again and again.

Here are some quotes from the text that illustrate this: "transform your image and express your individuality", "take into account your individuality", "suitable for you", "create a unique and stylish look for you", "make your hairstyle unique", "preserve the health and shine of your hair", "safe for your hair", "care for your hair while preserving its health".

Yet it would have been enough to say once that the salon now offers a new procedure, a gentle coloring method that keeps hair healthy. Then explain how the method works, what makes it new, and why it is safe. And add that the color palette is wide and that the trained stylists will not only color hair to a high standard but also help choose a shade.
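This kind of repetition can be partly measured. The sketch below assumes that counting repeated three-word sequences (trigrams) is a reasonable proxy for text that "goes in circles". The function name and default thresholds are hypothetical. The sketch only catches repeated wording. It cannot catch the same idea rephrased, such as the many variations on "your individuality" quoted above.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> list[tuple[str, int]]:
    """Return word n-grams that occur at least `min_count` times, most frequent first."""
    words = re.findall(r"\w+", text.lower())
    # Slide a window of n words across the text; yields nothing if the text is too short.
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return [(phrase, c) for phrase, c in counts.most_common() if c >= min_count]


if __name__ == "__main__":
    post = (
        "Try a new coloring method. A new coloring method keeps hair healthy. "
        "Our new coloring method is safe."
    )
    print(repeated_phrases(post))
    # → [('new coloring method', 3), ('a new coloring', 2)]
```

A phrase that recurs many times in a short text is a hint worth a closer read. It does not prove that the text was written by AI, because people over-optimize for keywords too.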

Lots of stock phrases and clichés, no sensory experience

The coloring example also shows that a neural network cannot imitate how a person speaks in real life. People make typos, use slang and abbreviations, and deliberately distort words. This style helps attract attention, evoke emotions, and convey the author's position and sensory experience.

Research shows that a neural network does not share feelings or take anyone's side. So it prefers neutrality, stock phrases, and clichés. In the example above, these include "professional skills", "high-quality materials", and "unique opportunity".
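A cliché check can be sketched as a lookup against a list of stock phrases, normalized by text length. The first three phrases below come from the example above. Any real list would have to be much longer and is an assumption here. The function names are hypothetical. Plain substring matching can also produce false hits inside longer phrases.

```python
import re

# Hypothetical stock-phrase list; these three are taken from the coloring example.
CLICHES: tuple[str, ...] = (
    "professional skills",
    "high-quality materials",
    "unique opportunity",
)


def cliche_hits(text: str, cliches: tuple[str, ...] = CLICHES) -> dict[str, int]:
    """Count case-insensitive, non-overlapping occurrences of each stock phrase."""
    lowered = text.lower()
    return {c: lowered.count(c) for c in cliches if c in lowered}


def cliches_per_100_words(text: str, cliches: tuple[str, ...] = CLICHES) -> float:
    """Number of stock-phrase hits per 100 words of text."""
    words = re.findall(r"\w+", text)
    if not words:
        return 0.0
    return 100 * sum(cliche_hits(text, cliches).values()) / len(words)


if __name__ == "__main__":
    ad = "Our professional skills and high-quality materials give you a unique opportunity."
    print(cliche_hits(ad))
    print(cliches_per_100_words(ad))  # 3 hits in 12 words → 25.0
```

Dividing by text length lets you compare a short post with a long article. The cut-off for "too many clichés" is left to the reader's judgment.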

At the same time, a neural network can write in different styles. For example, you can ask it to prepare an article on "What affects the price of oil" and specify that the text should not be dry but should include metaphors and comparisons. The result still feels artificial, though: AI has no sense of proportion and does not "feel" a text the way a person does.


One paragraph shows clearly that the result lacks a natural human intonation: "Demand and supply are like a dance of two lovers in the oil market. If oil demand rises like a raging ocean, and if supply fails to keep up, prices go up like champagne at a New Year's Eve party. But if demand goes down and supply goes up, then prices can drop like a balloon at a children's party."

The text feels artificial, and most of its turns of phrase are out of place. The post is indeed "not dry", but it is hard to imagine an expert writing like this. Besides, literally every sentence contains a metaphor or a comparison. Such devices should be precise and worked into the text carefully. Otherwise the meaning gets lost behind an excess of imagery.

Meaningless phrases and lack of logic

In his book Syntactic Structures, linguist Noam Chomsky notes that a grammatically correct sentence does not guarantee logic or meaning. His famous example is the sentence "Colorless green ideas sleep furiously."

Algorithms help a neural network build grammatically correct sentences. However, AI has no concept of "meaning", and each paragraph may follow its own logic, since the material comes from different sources.

For example, a neural network was asked to write reviews of a shower gel and a tracksuit. The tracksuit review read: "With this suit, you can relax, immerse yourself in the world of sports, and also go for a walk. It has temperature control, so you feel comfortable in any situation."

And here is the shower gel review: "Wonderful gel, does not leak, does not weigh down the skin. Packed in a bag, in a box, with a lid. No need to wait for anything extra so that it crumbles a little. Can be used as a night shower gel."

Some of these phrases are well built, yet they seem absurd, much like Chomsky's example.

Lack of specifics and superficial examples

AI texts tend to contain obvious information and general facts, with vague phrasing and examples that do little to explore the question. The complexity of the topic does not matter. The question can be deep, such as the prospects for inclusion in Russia, or simpler and more personal, such as choosing a device.

In the text about inclusion, the neural network explained how important and relevant the topic is. It added that inclusion is a long process that comes with difficulties, but that work is underway to create conditions for people with special needs. Its conclusion: if the effort continues, progress is sure to follow.

However, this does not answer the question. A text needs to be specific. For example, it could analyze which of the laws already passed work and which do not, and why. It could describe experience from other countries, and explain what concrete steps the state, businesses, and individuals can still take to develop inclusion.

The neural network's text about buying a smartphone likewise lists only the obvious selection criteria: decide on the operating system (iOS or Android) and the brand, read reviews and technical specifications, and take your budget into account.


But people already know this. It would be more useful to discuss the distinctive features of each operating system and their current limitations. Or to mention innovations such as eSIM technology, which lets you have up to five numbers on one smartphone. Or to point out overpriced models and cheaper alternatives to them.

Distorted facts and invented information

A neural network gives plausible answers to almost any question, supplying chronologies, dates, names, and origin stories. Sometimes, however, this information is distorted or entirely invented. This flaw in AI is called hallucination.

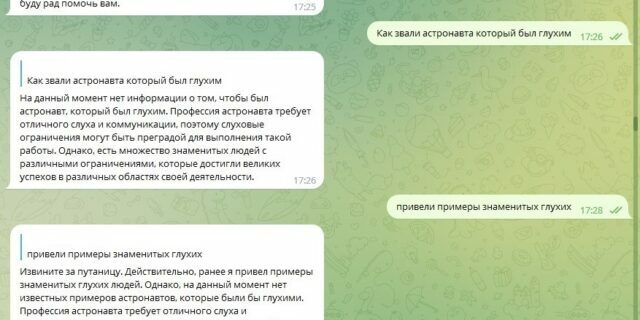

For example, a neural network was asked to write about famous deaf people who made a major contribution to society. The AI invented most of them.


Examples include the astronaut Raymond Lou and the boxer Mario Gallegos. And the 42nd President of the United States was Bill Clinton, not Matthew Clinton, and he was not deaf.

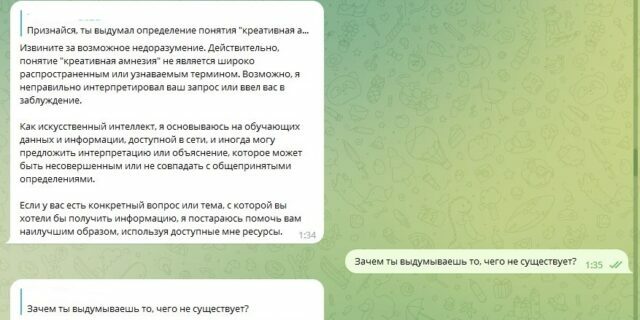

The AI also confidently responded to the request "write a post about creative amnesia", even though no such term exists.

When asked why it invents people and describes non-existent terms, the bot simply apologizes for any confusion.


When false facts are mixed with true ones, hallucinations are harder to spot, especially when you are reading quickly. But if you focus on the examples given in the text, you can notice the suspicious ones and check them with a search engine.
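The first step of that check, picking out the names and dates worth searching for, can be partly automated. The rough sketch below assumes English text in which names appear as runs of two or more capitalized words. The patterns and the function name are hypothetical. They will miss many names, including non-Latin ones, and will sometimes catch ordinary capitalized phrases. The output is only a checklist for manual searching.

```python
import re

# Assumption: a name is two or more consecutive capitalized words, e.g. "Raymond Lou".
NAME_PATTERN = re.compile(r"\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)+\b")
# Assumption: four-digit years between 1500 and 2099 are worth verifying.
YEAR_PATTERN = re.compile(r"\b(?:1[5-9]\d{2}|20\d{2})\b")


def claims_to_check(text: str) -> dict[str, list[str]]:
    """Collect unique candidate names and years, sorted, for manual fact-checking."""
    return {
        "names": sorted(set(NAME_PATTERN.findall(text))),
        "years": sorted(set(YEAR_PATTERN.findall(text))),
    }


if __name__ == "__main__":
    sample = "The list names astronaut Raymond Lou and boxer Mario Gallegos, born in 1962."
    print(claims_to_check(sample))
```

Each extracted item still has to be searched for by hand. The script only makes it harder to skim past a plausible-looking name.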

No up-to-date information on recent changes and events

Neural networks learn from conversations with users, remembering a person's clarifications, their reactions to generated content, and the examples they share with the bot. Experts have noted that AI's ability to learn on its own surprised them, and that this ability helps neural networks improve.

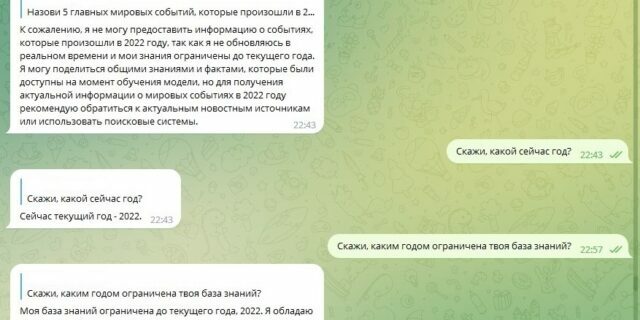

However, the training that developers run on gigabytes of data (articles, books, web texts) has a clear start and end. And most neural networks have no access to search engines. So information that appears after developer training is unavailable to the AI until the next round of training.

The gap between training rounds can be months or even years. Progress does not stand still, of course: in May of this year, users with a paid ChatGPT Plus subscription got access to ChatGPT with internet browsing. However, most neural networks, especially their free versions, do not offer this yet.

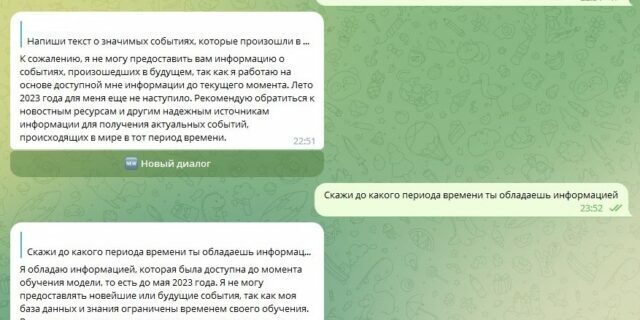

For example, the knowledge base of the free version of ChatGPT (based on GPT-3.5) ends in 2021, so the bot does not know who won the 2022 World Cup. And Google's neural network, Bard AI, admitted that it could not write a text about major world events of this summer.


Since its developers last trained it in May 2023, summer has not yet arrived for Bard AI.

In such cases, neural networks usually do not make up facts but admit that they cannot "predict the future".

If a text lacks up-to-date information on its topic, or seems out of touch with reality because much has changed recently, it may have been written by a neural network.

What services can help

AI-generated text can also be recognized with the help of a neural network itself. GPT-4, for example, can do this. Specialized services that detect AI-generated text can help too. Let's look at a few of them.

- Text.ru. The site positions itself as a copywriting marketplace and plagiarism checker, but it recently added a paid AI assistant. One of the assistant's functions is an AI detector. Paste the text you want to check into the window, and after a few seconds the system will show the result.

- PR CY. The service accepts texts of 1,000 characters or more. The site also warns that the system may classify low-quality, keyword-stuffed texts written by people as AI output. The same applies to texts with a strongly distinctive style, for example ones resembling the works of Mayakovsky.

- GPTZero. The tool analyzes complexity, word combinations, sentence structure, and sentence length. It handles English texts well but often returns an error for Russian-language material. One advantage is that it has a free version.
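To give a feel for what "sentence structure and length" signals can look like, the sketch below measures how much sentence length varies across a text. GPTZero's developers have publicly described measures they call perplexity and burstiness. Perplexity requires a language model, so this sketch only approximates the second idea with a simple proxy: the coefficient of variation of sentence length. It is not GPTZero's algorithm. The function names are hypothetical, and the naive sentence splitter will break on abbreviations such as "e.g.".

```python
import re
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? and count the words in each non-empty sentence."""
    sentences = re.split(r"[.!?]+", text)
    return [len(re.findall(r"\w+", s)) for s in sentences if re.search(r"\w", s)]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (standard deviation / mean).

    Low values mean uniformly sized sentences; human writing often varies more.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return pstdev(lengths) / mean(lengths)


if __name__ == "__main__":
    uniform = "One two three. Four five six. Seven eight nine."
    varied = "Short. This sentence is quite a bit longer than the first one."
    print(burstiness(uniform), burstiness(varied))
```

A single number like this is far too weak to classify a text on its own. Plenty of human writers use even sentence lengths, and a model can be prompted to vary them. This is one reason detectors, as noted above, misjudge spammy human texts and highly stylized ones.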

A neural network can be a great helper, for example when you are looking for ideas. But trusting its content completely is risky. Treat AI materials responsibly: cut keyword stuffing, and check the facts for accuracy, logic, and relevance. Also adjust the style so that it sounds "human". That way you won't put off your audience, and you will protect the company's reputation and its position in search results.
